Autonomous TEE Manifesto

Abstract

A spectre is haunting the world — the spectre of crypto. All the powers of the old world have entered into a holy alliance to exorcise this spectre.

Two things result from this fact:

I. Crypto is already acknowledged by all state power to be itself a stateless power. The promise of crypto, however, is at stake: both censorship resistance and decentralization have been compromised by centralizing forces in protocol design and in legacy institutions.

II. It is high time that cypher-punks should openly, in the face of the whole world, publish their views, their aims, their tendencies, and meet this nursery tale of the Spectre of Crypto with a manifesto of the movement itself, to change the tides of time.

To that end, cypher-punks from different territories have assembled to sketch the following manifesto, to be published as a free and open-source document. It outlines a vision of an open-source software and hardware architecture for confidential computation, in particular Trusted Execution Environments (TEEs).

In what follows, we outline our vision for each of the different parts of the technological substrate necessary to bring autonomous TEEs into being.

Seizing the Means of Computation and Compilation

In order to seize the means of computation and compilation, we require a permissionless digital and physical architecture for computing over data that is verifiable, attestable, and, importantly, confidential. That is, we must seize the material means of computation so that the data, and the integrated circuits on which it is computed, are constructed and distributed in a way that makes collusion and censorship close to impossible.

To that end, we hereby propose to start by creating Autonomous TEEs that are non-proprietary, built with free and open-source software and hardware, and auditable by the communities they serve. We also investigate how quantum physics, PUFs, and nonlinear optics can enhance the security of Autonomous TEEs, especially against physical attacks. The problem that TEEs aim to solve is that of secure remote computation: executing software on a remote computer owned and maintained by an untrusted third party, with some integrity and confidentiality guarantees. Current TEEs cannot defend against physical chip attacks, such as those performed using focused ion beam (FIB) microscopes, making contacts, and microprobing (see the section on Physical Attacks). Although some of these techniques are quite expensive, they are employed against high-value targets to obtain access keys, secret codes, proprietary data, or other sensitive information. Once attackers have the secret keys, they can emulate the TEE and the attestation process without being noticed.

A strategic path towards autonomous TEEs requires gradual, incremental, tactical improvements to the software stack of current enclaves like Intel’s SGX/TDX, as well as research and development into open hardware that potentially leverages quantum phenomena and PUFs. We draw a roadmap from software to hardware, from short-term to long-term development of an alternative infrastructure and architecture, based on an immanent critique of the hegemonic and proprietary TEE stack.

First, we will outline the advantages and issues of current TEEs, then focus on various emerging components of the proposed technology, which are:

- Open Hardware

- Physical Attacks, PUFs & Quantum Physics

- Reproducible Builds

- Mitigation Tools

- Verifiable Log

- Verifiable Computation

- Formal Verification

- Remote Attestation

TEEs as trust anchors

We always trust people, whether we realize it or not. When we use technology, we trust that it was designed to function as intended. This trust extends to cryptography and software development. When we say “trust the math” or “trust the physics,” we are essentially saying, “Let’s trust the person implementing this technology to use mathematics and physics correctly to ensure its integrity.”

When discussing TEEs, we refer to a complex setup comprising various technological components. It’s more than just a chip (known as the HSM or Hardware Security Module) capable of real-time encryption/decryption. The environment also encompasses an expanded instruction set that the CPU can execute, encrypted memory allocated to it, and several attestation capabilities provided by third parties. TEEs prove invaluable when establishing a shared private state among different network actors.

The architecture of a TEE comprises hardware components such as secure enclaves, secure boot processes, and memory protection mechanisms, along with software components like TEE operating systems and trusted applications.

Intel’s SGX, or Software Guard Extensions, encompasses several components:

- The CPU itself, which can execute an extended x86 instruction set. The SGX extensions add instructions (such as ECREATE, EADD, EEXTEND, EINIT, EENTER, and EEXIT) for creating an enclave, loading and measuring its pages, and entering and exiting it. When the CPU processes these instructions, it allocates a portion of protected memory to an enclave.

- An enclave, which consists of:

    - An instruction set

    - A hardware security module either integrated within or external to the CPU

    - An encrypted sector of memory

- Attestation capabilities, provided by third parties (such as cloud providers or smart contracts).

Intel’s SGX TEEs serve as a trust anchor for confidential computation to happen in a verifiable and attestable way. The question is, do we trust them? Can we do better?

The Problem with current TEEs

Intel’s Trusted Execution Environments, particularly SGX, have faced several technical issues and security exploits in recent years. The SGX.fail article [1] effectively highlights examples of security vulnerabilities in projects running on SGX.

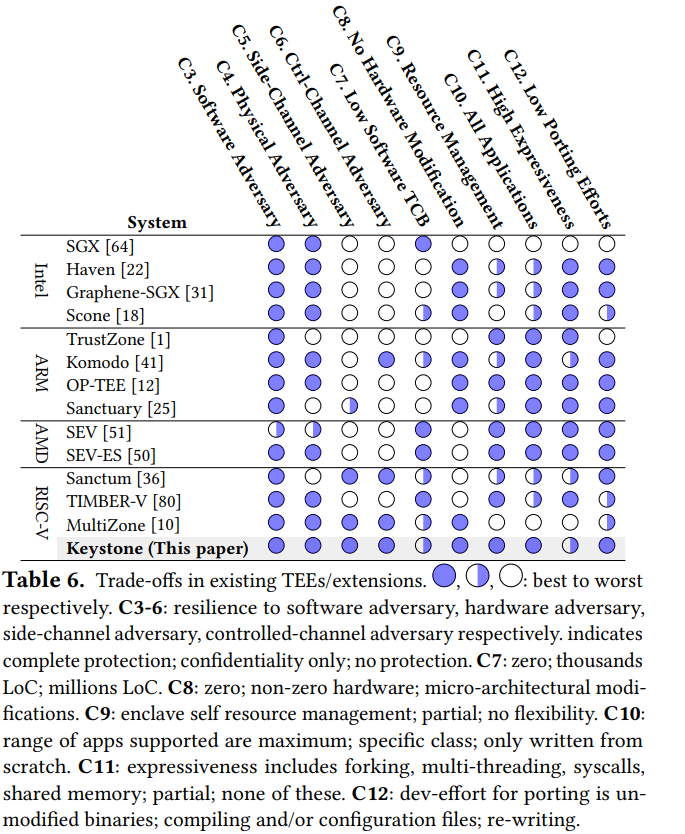

The trade-offs in existing TEEs and TEE-based systems are explained in detail in the Keystone paper [2]. The following table shows the different types of vulnerabilities and attacks, and how well each TEE system addresses them. Here, you can see how Intel SGX is vulnerable in various categories, including side-channel and controlled-channel attacks, and possible hardware modifications.

When using TEEs, it is essential to ensure that the system administrator runs specific software that is known in advance. This restricts the administrator to a fixed set of functions, such as generating a key, sending the key, processing the input, and sending the result, instead of arbitrary actions. As a result, the only way to read data inside the enclave is through physical attacks or interventions. A significant problem therefore remains: how to prevent physical attacks.

Attestation by Signature and Its Discontents

Another issue with current Intel SGX is that attestation is done by a signature, which does not necessarily reflect what is actually running. When software is loaded, the SGX chip hashes it and checks that the software matches the expected hash. A signature over the hash, with a date, is then provided, along with a certificate stating, “I have loaded the software you provided me.” This signature is from Intel. In other words, we must trust Intel to have implemented this process correctly, and the fact that a certificate was issued by Intel does not guarantee that the software will run as claimed.

This poses a risk when trusted companies can be manipulated or abuse their trust. For example, an NSA agent could ask Intel to issue a certificate that falsely indicates the expected software is running, while in reality, it is NSA software capable of surveillance. This deception would go unnoticed. Therefore, there is a need for TEEs that are auditable.
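The trust assumption behind signature-based attestation can be made concrete with a small Python sketch. It is not Intel’s protocol: an HMAC from the standard library stands in for the asymmetric quoting signature, and `measure`, `issue_quote`, and `verify_quote` are hypothetical names. What it shows is that the verifier only checks a signature over a claimed hash, so whoever controls the signing key can vouch for any measurement, regardless of what is actually running.

```python
import hashlib
import hmac

# Hypothetical sketch: names and the key are illustrative, not a real API.
# Real SGX quotes use asymmetric signatures; an HMAC keeps this stdlib-only.
VENDOR_KEY = b"vendor-attestation-key"


def measure(code: bytes) -> str:
    """Hash the loaded code, analogous to an enclave measurement."""
    return hashlib.sha256(code).hexdigest()


def issue_quote(measurement: str, key: bytes = VENDOR_KEY) -> dict:
    """Sign a claimed measurement. The signature vouches for the claim only."""
    sig = hmac.new(key, measurement.encode(), hashlib.sha256).hexdigest()
    return {"measurement": measurement, "signature": sig}


def verify_quote(quote: dict, expected: str, key: bytes = VENDOR_KEY) -> bool:
    """Accept if the signature is valid and the claimed hash is the expected one."""
    sig = hmac.new(key, quote["measurement"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(sig, quote["signature"]) and quote["measurement"] == expected


expected = measure(b"audited enclave v1")

# Honest path: the audited code is loaded, measured, and signed.
honest = issue_quote(measure(b"audited enclave v1"))

# Coerced path: different code is running (and never measured), but the key
# holder signs the expected measurement anyway.
running = b"surveillance build"
forged = issue_quote(expected)

print(verify_quote(honest, expected))  # True
print(verify_quote(forged, expected))  # True: indistinguishable to the verifier
```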

1. Open Hardware

There have been attempts to make open-source TEEs in the past. The most notable one we have encountered so far is Keystone [2], an open-source enclave framework for the RISC-V instruction set. On the hardware side, there is OpenTitan [3], an open-source silicon root of trust. We are not aware of anyone attempting to combine the two. In the short term, it probably makes more sense to start testing on programmable chips before moving on to semiconductors and custom silicon.

Build your own TEEs, FPGAs, and more

We have discussed issues at both the software and hardware levels, including vulnerabilities to side-channel and chip attacks (see the section on Physical Attacks below). Many projects have targeted software issues in TEEs on platforms like SGX, TrustZone, and RISC-V. However, some TEE problems stem from the hardware design itself and cannot be fixed with software alone. To address these hardware vulnerabilities, some projects have used hardware/software co-design to create custom TEEs on Field Programmable Gate Arrays (FPGAs). The CactiLab group at the University at Buffalo, USA, proposed BYOTee (Build Your Own TEE) [4]. It builds enclaves with tailored hardware Trusted Computing Bases (TCBs) and sets up a dynamic root of trust. This ensures the untampered execution of Security-Sensitive Applications (SSAs) from existing software on the main system, while providing mechanisms to attest to the integrity of the enclaves’ hardware and software stacks.

BYOTee secures against various attacks by isolating and encrypting enclave data, using priority interrupts to prevent DoS attacks, and verifying bitstreams to block malicious hardware IPs. It confines compromised firmware effects to individual enclaves and secures sensitive data against cold-boot attacks by using BRAM. Cache side-channel attacks are avoided by not time-sharing CPU resources, and power side-channel attacks are prevented by loading only trusted bitstreams. However, BYOTee cannot fully prevent sharing-based side-channel attacks on multi-enclave FPGAs. For more information, see CactiLab Publications.

Another group at the University of Minho, Portugal, including Sandro Pinto [5], is also working on FPGA-based TEEs. They created Trusted Execution Environments On-Demand (TEEOD), which uses reconfigurable FPGA technology to enhance security for critical applications. It creates secure enclaves within the programmable logic (PL) by deploying customized security processors dedicated to each application as required.

Meetgo and Ambassy are other examples of TEEs successfully implemented on FPGAs, with applications including cryptocurrency wallets.

OP-TEE, the open-source TEE for Arm TrustZone, has also been used as the basis of FPGA-based designs, such as Missing Link Electronics’ OP-TEE FPGA IP core design [6], which additionally integrates PUF (Physically Unclonable Function) support for secure key handling.

FPGAs have also been combined with SGX: SGX-FPGA [7] bridges SGX enclaves and FPGAs in a heterogeneous CPU-FPGA architecture.

2. Physical Attacks, PUFs & Quantum Physics

Before we invest significant effort into creating an Autonomous TEE, we need to justify why we can’t simply replicate the architecture of Intel’s SGX, one of the most commonly used TEEs. Is it even possible to autonomize and produce it in an open-source manner?

Intel’s SGX cannot fully meet the verification requirements for Autonomous Open Source Hardware because of several trust and security concerns. First, the system requires trusting Intel not to leak critical keys, such as the attestation key and the provisioning key, to which it has access during the manufacturing process. Second, we must be confident that the manufacturing process does not compromise the Seal Secret, and that Intel does not attempt to extract it after the chip is produced, since this is the only key Intel does not initially know. Third, anyone with access to Intel’s signing key could sign a fraudulent quoting enclave that misuses the attestation key to produce fake quotes. Together, these factors show that SGX cannot provide the independence from key leakage and misuse that Autonomous Open Source Hardware requires, where security and verification must remain unaffected by any stage of manufacturing or by potential abuse of power. For more information, see the Intel SGX Explained [8] and qTEE [9] papers. This highlights the need for an Autonomous TEE that includes measures against side-channel attacks, physical attacks, and chip-level attacks.

What are the possible Physical Attacks?

Physical attacks on computer chips can be classified by cost, which reflects the equipment needed and the complexity of the attack. Here are some examples of physical attacks described in the Intel SGX Explained paper [8].

Denial of service (DoS) is the simplest, involving disconnecting power or network cables. Port attacks, such as cold boot attacks, involve connecting a device, like a USB flash drive, to an existing port to boot malicious software. Debug port attacks, which target ports intended for development and diagnostics, are more expensive and are generally ignored by secure architectures, which assume these ports are disabled in consumer devices. However, manufacturers often preserve these ports to facilitate repairs.

Bus tapping attacks are more complex, involving devices that monitor or alter traffic on the computer’s motherboard buses. Passive bus tapping monitors traffic, while active tapping can modify it or replay commands to exploit systems relying on static signatures. Tapping costs increase with bus speed and complexity, with simpler buses like SPI being cheaper to tap than faster ones like DDR.
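The replay problem mentioned above can be sketched in Python. This is a toy model with hypothetical names, and an HMAC stands in for a signature: a command authenticated with a static tag can be recorded off the bus and replayed verbatim, whereas binding each tag to a fresh nonce issued by the device makes a recording useless after its first use.

```python
import hashlib
import hmac
import secrets

# Hypothetical sketch: device classes and the shared key are illustrative.
KEY = secrets.token_bytes(32)  # secret shared by the controller and the device


def tag(message: bytes, nonce: bytes = b"") -> bytes:
    """Authenticate a message, optionally bound to a nonce."""
    return hmac.new(KEY, nonce + message, hashlib.sha256).digest()


class StaticDevice:
    """Accepts any command with a valid tag: no freshness check."""

    def accept(self, message: bytes, mac: bytes) -> bool:
        return hmac.compare_digest(tag(message), mac)


class NonceDevice:
    """Issues a fresh nonce per command and honours each nonce only once."""

    def __init__(self) -> None:
        self.pending = set()  # nonces issued but not yet used

    def challenge(self) -> bytes:
        nonce = secrets.token_bytes(16)
        self.pending.add(nonce)
        return nonce

    def accept(self, message: bytes, nonce: bytes, mac: bytes) -> bool:
        if nonce not in self.pending:
            return False  # unknown or already-used nonce: treat as a replay
        self.pending.discard(nonce)
        return hmac.compare_digest(tag(message, nonce), mac)


cmd = b"unlock"

# Static scheme: a bus tap records (cmd, mac) once and replays it later.
static = StaticDevice()
recorded = tag(cmd)
print(static.accept(cmd, recorded), static.accept(cmd, recorded))  # True True

# Nonce scheme: the recorded (cmd, nonce, mac) triple works only once.
dev = NonceDevice()
nonce = dev.challenge()
mac = tag(cmd, nonce)
print(dev.accept(cmd, nonce, mac), dev.accept(cmd, nonce, mac))  # True False
```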

Chip Attacks - FIB milling

At the chip level, probing attacks are among the most potent methods to breach security and extract sensitive information from embedded systems. This sophisticated attack requires specialized equipment, including a focused ion beam (FIB) station, as well as expertise, making it very expensive. Using a FIB station, attackers can access internal device signals at the micro- or nano-scale. This method effectively eliminates measurement noise, enabling the extraction of critical information like secret keys or encrypted data. Attackers typically target buses to read memory contents, or combinatorial signals to capture sensitive intermediate values.

This process involves several tedious steps: removing the IC package using a corrosive chemical, delayering the chip, capturing the layout, bypassing protective shields through milling, making contacts, extracting data, and rerouting the circuit. These methods aim to physically disconnect some or all of the circuitry that implements security functions in order to access sensitive data. Additionally, signals can be injected to alter the execution of the embedded code. FIB also allows probing and monitoring of the circuit’s internal signals during operation, which helps the attacker locate sensitive signals and access them through direct connections that bypass security features [10][11].

There are multiple countermeasures against these attacks. While it is impossible to eliminate the risk fully, attacks can be made more expensive, time-consuming, and complex by incorporating various measures during the design and manufacturing process. These include using different materials as shields, building active shields, implementing redundancy in core components, and using analog detection circuits. Novel techniques include creating weakening holes that cause the die to break during milling, and applying wafer-level countermeasures on both sides of the packaging [12]. Another innovative method is building detection circuits that monitor for damage to the shielding metal wires [13][14].
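The active-shield and detection-circuit idea can be illustrated with a behavioural model in Python. This is a toy simulation with hypothetical names, not a circuit design: shield wires are driven with fresh random bits every cycle, and a comparator checks that the same bits arrive at the far end. A wire severed by milling (modelled here as stuck at 0) soon produces a mismatch. Random rather than fixed patterns matter because an attacker who bridges a cut with a constant signal would otherwise pass the check.

```python
import random

# Hypothetical sketch: a behavioural model, not a real shield design.


class ActiveShield:
    """Toy active shield: every wire carries a fresh random bit each cycle."""

    def __init__(self, n_wires: int, seed: int = 0) -> None:
        self.n_wires = n_wires
        self.rng = random.Random(seed)
        self.cut = set()  # indices of wires severed by milling

    def cut_wire(self, index: int) -> None:
        self.cut.add(index)

    def cycle(self) -> bool:
        """Drive one random pattern and compare; return True on tamper detection."""
        sent = [self.rng.getrandbits(1) for _ in range(self.n_wires)]
        # A severed wire no longer carries the driven value; model it as stuck at 0.
        received = [0 if i in self.cut else bit for i, bit in enumerate(sent)]
        return sent != received


def cycles_until_alarm(shield: ActiveShield, max_cycles: int = 1000):
    """Return the cycle at which tampering was detected, or None."""
    for t in range(1, max_cycles + 1):
        if shield.cycle():
            return t
    return None


shield = ActiveShield(n_wires=64)
print(cycles_until_alarm(shield, max_cycles=100))  # None: an intact shield never alarms

shield.cut_wire(17)
# Each cycle the cut wire should carry a 1 with probability 1/2,
# so the alarm is expected within a few cycles.
print(cycles_until_alarm(shield))
```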

Currently, the consensus is that chips cannot be fully protected against physical attacks and can only be made expensive to attack. But what if the adversary has unlimited resources? Does this mean a chip can never be fully secured?

There might be a novel solution using physical unclonable functions (PUFs). This technology allows the metallization of the chip itself to be fingerprinted during manufacturing, and the fingerprint becomes an internal part of a secret key. If an adversary uses a FIB to modify the chip’s metallization, the secret key is corrupted, rendering the attack unsuccessful [15].
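Deriving a stable key from a PUF requires tolerating small read-out noise while still reacting to real physical change. One standard construction for this, not named in the sources above, is a fuzzy extractor. The Python sketch below uses its simple code-offset form with a repetition code; the simulated PUF, the noise rates, and all function names are hypothetical. Enrolment publishes helper data, reconstruction corrects a few flipped bits, and a large disturbance (standing in for FIB damage to the metallization) produces a different key.

```python
import hashlib
import random

# Hypothetical sketch: a simulated PUF and a toy code-offset fuzzy extractor.
KEY_BITS = 32  # toy key size; real designs derive far more secret bits
REP = 7        # repetition factor: each key bit is spread over 7 PUF bits
N = KEY_BITS * REP


def read_puf(device_seed: int, flip_prob: float, rng: random.Random) -> list:
    """Simulated PUF readout: a fixed device-specific bit pattern plus read noise."""
    pattern = random.Random(device_seed).getrandbits(N)
    bits = [(pattern >> i) & 1 for i in range(N)]
    return [b ^ int(rng.random() < flip_prob) for b in bits]


def derive_key(key_bits: list) -> str:
    return hashlib.sha256(bytes(key_bits)).hexdigest()


def enroll(response: list, rng: random.Random):
    """Pick random key bits and publish helper = response XOR codeword."""
    key_bits = [rng.getrandbits(1) for _ in range(KEY_BITS)]
    codeword = [b for b in key_bits for _ in range(REP)]
    helper = [r ^ c for r, c in zip(response, codeword)]
    return helper, derive_key(key_bits)


def reconstruct(response: list, helper: list) -> str:
    """Remove the offset, then majority-decode each block of REP bits."""
    noisy = [r ^ h for r, h in zip(response, helper)]
    key_bits = [int(sum(noisy[i:i + REP]) > REP // 2) for i in range(0, N, REP)]
    return derive_key(key_bits)


rng = random.Random(42)
helper, key = enroll(read_puf(device_seed=7, flip_prob=0.0, rng=rng), rng)

# Normal boot: about 2% of bits flip, which the repetition code almost always corrects.
print(reconstruct(read_puf(7, 0.02, rng), helper) == key)
# Tampering that disturbs many cells (modelled as 40% flips) almost always yields another key.
print(reconstruct(read_puf(7, 0.40, rng), helper) == key)
```

A repetition code is used only for brevity; practical designs use stronger error-correcting codes and must account for what the helper data leaks about the response.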

There is a dialectical dynamic process between surveillance and the freedom derived from collective anonymity. We posit that to counter the surveillance states of the 21st century, we can integrate PUFs and quantum physics into TEEs to help us completely prevent unauthorized access to the information, regardless of the resources or capabilities of the attacker, including powerful entities with significant resources.

Let’s look into these possibilities together in the following sections:

Physical Unclonable Functions

According to Gao et al. (2020) [16], a physical unclonable function (PUF) is “a device that exploits inherent randomness introduced during manufacturing to give a physical entity a unique ‘fingerprint’ or trust anchor.”

Physical Unclonable Functions (PUFs) can be leveraged to generate a root of trust for Trusted Execution Environments (TEEs). They exploit inherent physical variations in silicon, or in other physical properties, to produce a unique and unclonable identifier for each chip, which can be used to establish the authenticity and integrity of the TEE. This uniqueness can serve as a foundational element of the TEE’s root of trust.

Furthermore, PUFs can prevent FIB attacks because the chip’s functionality relies on its physical integrity. Any attack or damage to the PUF would permanently alter its unique function, which depends on the specific material composition and structure.

PUFs can be used for:

- Cryptographic key generation: PUFs can derive cryptographic keys from the unique physical characteristics of the chip. These keys can be used to authenticate the TEE and secure its operations, serving as the basis for establishing a root of trust.

- Bootstrapping: During the bootstrapping process, the TEE can verify its own authenticity and integrity by leveraging the unique identifier or keys derived from PUFs.

- Attestation and Remote Verification: The unique identifier or keys generated from PUFs can be used for attestation purposes, allowing the TEE to prove its identity and integrity to external entities. This enables remote verification of the TEE’s authenticity and trustworthiness.
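The attestation and remote-verification use can be sketched as challenge-response authentication. In the hypothetical Python sketch below, a verifier records challenge-response pairs (CRPs) while it has trusted access to the chip, then later spends one unused challenge per check and accepts answers within a Hamming-distance threshold, since PUF responses are noisy. The PUF is simulated with a keyed hash plus random bit flips; the class names, noise level, and threshold are assumptions.

```python
import hashlib
import random
import secrets

# Hypothetical sketch: class names, noise level, and threshold are illustrative.
RESP_BITS = 128


class SimulatedPUF:
    """Stand-in for a physical PUF: device-specific, slightly noisy responses.

    A keyed hash models manufacturing variation here; a real PUF keeps no
    stored secret, which is exactly its advantage.
    """

    def __init__(self, physical_seed: bytes, noise: float = 0.03) -> None:
        self._seed = physical_seed
        self.noise = noise

    def respond(self, challenge: bytes) -> int:
        digest = hashlib.sha256(self._seed + challenge).digest()
        ideal = int.from_bytes(digest[: RESP_BITS // 8], "big")
        flips = sum(1 << i for i in range(RESP_BITS) if random.random() < self.noise)
        return ideal ^ flips


class Verifier:
    """Holds challenge-response pairs (CRPs) recorded at enrolment."""

    def __init__(self, threshold: int = 25) -> None:
        self.crps = []
        self.threshold = threshold  # max Hamming distance accepted

    def enroll(self, puf: SimulatedPUF, count: int) -> None:
        for _ in range(count):
            challenge = secrets.token_bytes(16)
            self.crps.append((challenge, puf.respond(challenge)))

    def authenticate(self, device: SimulatedPUF) -> bool:
        # Each CRP is spent once so an eavesdropper cannot replay a response.
        challenge, expected = self.crps.pop()
        distance = bin(device.respond(challenge) ^ expected).count("1")
        return distance <= self.threshold


genuine = SimulatedPUF(secrets.token_bytes(32))
clone = SimulatedPUF(secrets.token_bytes(32))  # different physical structure
verifier = Verifier()
verifier.enroll(genuine, count=10)
print(verifier.authenticate(genuine))  # True with overwhelming probability
print(verifier.authenticate(clone))    # False with overwhelming probability
```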

The intuition here is that by leveraging PUFs to generate a root of trust, autonomous TEEs can establish a strong foundation for security and trustworthiness, enabling secure and reliable execution of sensitive computations within the enclave.

As Sylvain Bellemare puts it [17], we cannot hide atoms. According to his qTEE write-up [9], “PUFs are arguably the current best hope to protect against physical attacks aimed at extracting secret keys (root of trust)”. More prototyping, research, and development are needed in this area.

Towards the Quantum Realm: Q-PUFs

Quantum PUF devices utilize quantum mechanics and the inherent randomness from physical variations in the manufacturing process to achieve security. This randomness grants the device high min-entropy, ensuring robust security without the need for additional cryptographic properties or assumptions. A QPUF is accessed by submitting a quantum challenge, such as an electrical signal, an optical pulse, or a temperature signal, and receiving a distinctive response. This response is consistent for a particular QPUF token but varies greatly among different, similar QPUFs, making each token’s output appear random. Each QPUF also has a unique identifier representing the specific randomization used during its creation [18].

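Quantum Readout PUFs [19] combine a classical physical PUF with quantum challenges and responses: the PUF is interrogated with quantum states, which an eavesdropper cannot copy. Such schemes are still vulnerable to certain attacks in the quantum realm, such as the challenge-estimation attacks analysed in [19].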

Quantum phenomena have been explored in the context of PUFs for some time. A paper on the taxonomy of PUFs [20] summarizes various types, including Quantum Electronic PUFs (Q-EPUFs) [21] and Quantum Optical PUFs (Q-OPUFs) [22]. In addition, a comparison of quantum PUF models [23] discusses the advantages and disadvantages of different approaches.

The paper “Exploring the limitations and possibilities of qPUFs” [24], from the University of Edinburgh, defines qPUFs as quantum channels and formalizes the standard requirements of robustness, uniqueness, and collision resistance, guided by their classical counterparts, to establish what qPUFs must satisfy to be usable as a cryptographic primitive. The authors introduce a new quantum attack technique based on the universal quantum emulator algorithm to prove that no qPUF can provide quantum existential unforgeability. However, they also prove that a large family of qPUFs (called unitary PUFs) can provide quantum selective unforgeability, which is the level of security desired for most PUF-based applications.
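To make the idea of a unitary qPUF more concrete, here is a sketch in our own notation, assuming the verifier compares states with the standard SWAP test; the paper’s exact protocol may differ. The device applies an unknown unitary U to a challenge state |ψ⟩, and the verifier checks the returned state |φ′⟩ against a reference response |φ⟩:

```latex
% Sketch in our own notation; assumes SWAP-test verification.
\begin{aligned}
\lvert \phi \rangle &= U \lvert \psi \rangle
  && \text{response to the challenge } \lvert \psi \rangle \\
\Pr[\text{accept}] &= \tfrac{1}{2} + \tfrac{1}{2}\, F,
  \qquad F = \lvert \langle \phi \mid \phi' \rangle \rvert^{2}
  && \text{SWAP test on } \lvert \phi \rangle, \lvert \phi' \rangle \\
\Pr[\text{accept all } k \text{ rounds}] &= \left( \tfrac{1 + F}{2} \right)^{k}
  && \text{independent rounds}
\end{aligned}
```

An honest device returns |φ′⟩ = |φ⟩, so F = 1 and it always passes; a forger whose output has fidelity F < 1 passes all k rounds only with probability ((1 + F)/2)^k, which decays exponentially in k.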

Quantum Dot PUFs (QD-PUFs) [25] have also been explored. The authors demonstrated optically read tokens of this kind and found that the response derived from the dots’ emission remains stable for a finite period of time, which they tested using an authentication algorithm.

In conclusion, all of these methods are candidates for building highly secure Autonomous TEEs that leverage quantum phenomena and optics. By exploiting the inherent randomness and unique properties of quantum mechanics, these approaches offer a robust foundation for enhancing security in various applications.

What about non-linear optics?

Hardware is software. It is just software you cannot change. Instead of electronics, what if we were to rely on micro-photonic devices to compute operations? Can we leverage the non-linear optical properties of materials to improve TEEs?

Ising machines [26] have already been built and have successfully solved Max-Cut problems. Caltech has also produced a video explaining Ising machines.
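The link between Ising machines and Max-Cut is a short calculation. Give each vertex a spin s_i in {-1, +1}; an edge of weight w_ij is cut exactly when s_i ≠ s_j, and the Ising energy E = Σ w_ij s_i s_j equals the total edge weight minus twice the cut weight. Minimising the energy, which is what an Ising machine does physically, therefore maximises the cut. The Python sketch below checks this by brute force on a small, hypothetical graph.

```python
from itertools import product

# Hypothetical example graph: a 4-cycle 0-1-2-3 plus the diagonal 0-2.
EDGES = [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 1.0), (3, 0, 1.0), (0, 2, 1.0)]
N = 4
TOTAL = sum(w for _, _, w in EDGES)


def ising_energy(spins) -> float:
    """Ising energy with couplings J_ij = w_ij (antiferromagnetic)."""
    return sum(w * spins[u] * spins[v] for u, v, w in EDGES)


def cut_value(spins) -> float:
    """Total weight of edges whose endpoints have opposite spins."""
    return sum(w for u, v, w in EDGES if spins[u] != spins[v])


configs = list(product((-1, 1), repeat=N))
# Energy and cut are tied together: E = W_total - 2 * cut for every configuration.
assert all(ising_energy(s) == TOTAL - 2 * cut_value(s) for s in configs)

# So the ground state an Ising machine settles into is a maximum cut.
best = min(configs, key=ising_energy)
print(best, ising_energy(best), cut_value(best))  # (-1, 1, -1, 1) -3.0 4.0
```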

3. Reproducible Builds and the Bureaucratization of Software

To understand reproducible builds or the functional deployment model, it is important to review what source code and binaries in software development mean.

Source code is the human-readable version of a program written in a programming language (Rust, C, Java), while binaries are the machine-executable version of the program generated from the source code through a process called compilation.

The relationship between source code and binaries is that the source code serves as the blueprint for creating the binaries.

Reproducible builds refer to the ability to generate exactly the same binary output from a given source code every time it is compiled, regardless of who compiles it.

This ensures that the build process is deterministic and independent of the environment (or computer) in which it is executed. In contrast to the functional deployment model, the traditional deployment model often results in non-reproducible builds due to dependencies on specific tools, libraries, or environments. This can lead to inconsistencies and potential security vulnerabilities in the deployed software.

This is a consequence of current software development practices and of what we like to call the bureaucratization of software. People are used to deploying other people’s code without checking whether the binaries correspond to the source code. Every change to the software, through development cycles, forks, and so on, increases the likelihood of new attack vectors. This problem is compounded by the growing reliance on AI and machine learning in software development.

Modern software stacks often consist of interconnected components and dependencies, which can lead to cascading failures if one part of the system encounters an issue. The so-called software “stack” today is like a pile of if statements stacked into towers of information, block after block, commit after commit, server upon server, where, if one fails, the whole tower goes down. Every software developer has had the experience of spending most of their waking time fixing bugs or problems with the deployment of a specific library rather than actually writing code. The bureaucratization of software transforms code into a complex system of rules and regulations. This can create uncertainty, as users may not always be sure whether the software they are running is genuine or counterfeit.

Another way to think about non-reproducible software is as a tower of poorly stacked bricks, where the consistency and integrity of the material in each brick vary, and where all the bricks depend on each other to stand. Compare this to a seed, which inherently knows from its DNA what it needs to do to grow into a plant. In that sense, reproducible and bootstrappable builds are autopoietic.

Overall, reproducible builds or the functional deployment model help improve the bootstrappability, integrity, and security of software deployment by ensuring that the build process is predictable and reproducible, similar to following a precise recipe when cooking.
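A small Python sketch shows why reproducibility depends on controlling the build environment. Packaging stands in for compilation here, and the file names and contents are hypothetical: the same sources are archived twice, on two simulated machines. Without normalization, file order and timestamps leak into the artifact and the hashes differ; with sorted entries and a pinned timestamp (the idea behind the `SOURCE_DATE_EPOCH` convention used in reproducible-build toolchains), the two builds are byte-identical, and anyone can verify a binary by rebuilding it and comparing hashes.

```python
import hashlib
import io
import tarfile

# Hypothetical source tree; packaging stands in for compilation.
SOURCES = {"main.c": b"int main(void) { return 0; }\n", "util.h": b"#pragma once\n"}


def build(files: dict, normalize: bool, build_time: int) -> str:
    """Package files into an uncompressed tar and return the artifact's SHA-256."""
    buf = io.BytesIO()
    # Unnormalized builds keep whatever order the files were found in.
    names = sorted(files) if normalize else list(files)
    with tarfile.open(fileobj=buf, mode="w", format=tarfile.USTAR_FORMAT) as tar:
        for name in names:
            info = tarfile.TarInfo(name)
            info.size = len(files[name])
            # Normalized builds pin the timestamp; others leak the build time.
            info.mtime = 0 if normalize else build_time
            tar.addfile(info, io.BytesIO(files[name]))
    return hashlib.sha256(buf.getvalue()).hexdigest()


# A second "machine" sees the files in a different order, a minute later.
reordered = dict(reversed(list(SOURCES.items())))

a = build(SOURCES, normalize=False, build_time=1_700_000_000)
b = build(reordered, normalize=False, build_time=1_700_000_060)
print(a == b)  # False: same source, different artifacts

c = build(SOURCES, normalize=True, build_time=1_700_000_000)
d = build(reordered, normalize=True, build_time=1_700_000_060)
print(c == d)  # True: anyone can rebuild and compare hashes
```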

The Autonomous TEE hereby proposed is a poetic technology that relies on reproducible builds to create the world’s most secure software supply chain for trusted enclaves.

4. Mitigation Tools

Developing mitigation tools for a trusted execution environment (TEE) involves a combination of software and hardware expertise. TEEs are secure environments that provide isolated execution for sensitive code and data, and creating mitigation tools for them requires a deep understanding of both security principles and low-level system architecture. As far as we know, Intel’s SGX SDK, DCAP, and drivers are all open source. The only piece of Intel’s SGX stack that is not open source is its mitigation tools. For gradual, incremental improvements to the security of current and future TEEs, open-source mitigation tools are a must.

There are currently two paths that we see towards fixing this problem: reverse engineering of their binaries or creating our own mitigation tools.

Reverse Engineering of Binaries

In order to reverse engineer the binaries, we require expertise in low-level programming languages (e.g., C, assembly) and binary analysis. The task is to understand and audit the logic of the binaries and check whether they contain any backdoors.

Autonomous Mitigation Tools

In order to create our own mitigation tools we essentially need a compiler engineer who has knowledge of binary analysis.

Mitigation techniques such as control flow integrity (CFI), data execution prevention (DEP), address space layout randomization (ASLR), and stack canaries can and should be explored and adapted for the autonomous TEE environment.
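Of the techniques listed above, stack canaries are the easiest to show in a few lines. The Python sketch below is a toy memory model, not how a compiler actually emits the check, and its names and sizes are hypothetical: a stack frame holds a fixed-size buffer, then a random canary, then the saved return address. An unchecked copy that overflows the buffer must overwrite the canary before it reaches the return address, so checking the canary before returning detects the overflow. Compiled code would typically abort the process where this sketch raises an exception.

```python
import secrets

# Hypothetical sketch: a toy memory model of a stack frame, not compiler output.
BUF_SIZE = 16
CANARY = secrets.token_bytes(8)  # chosen at random when the "program" starts


def make_frame(return_address: int) -> bytearray:
    """Toy frame layout, low to high: [buffer][canary][saved return address]."""
    return bytearray(BUF_SIZE) + CANARY + return_address.to_bytes(8, "little")


def unchecked_copy(frame: bytearray, data: bytes) -> None:
    """Like strcpy into the buffer: nothing checks len(data) against BUF_SIZE."""
    frame[: len(data)] = data


def function_return(frame: bytearray) -> int:
    """Verify the canary before trusting the saved return address."""
    if bytes(frame[BUF_SIZE:BUF_SIZE + 8]) != CANARY:
        raise RuntimeError("stack smashing detected")
    return int.from_bytes(frame[BUF_SIZE + 8:BUF_SIZE + 16], "little")


frame = make_frame(0x401000)
unchecked_copy(frame, b"hello")
print(hex(function_return(frame)))  # 0x401000

frame = make_frame(0x401000)
# Overflow: 16 filler bytes, 8 bytes over the canary, then a new return address.
unchecked_copy(frame, b"A" * 16 + b"\x00" * 8 + (0xDEADBEEF).to_bytes(8, "little"))
try:
    function_return(frame)
except RuntimeError as err:
    print(err)  # stack smashing detected
```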

Testing and verification techniques for security-critical software, including static analysis, dynamic analysis, fuzz testing, and formal verification methods (e.g. via Isabelle and Candle), should also be researched and explored.

5. Verifiable Log and The Angel of History

A verifiable log is a directed acyclic graph (DAG) composed of pairs of input and output hashes for different build targets (toolchain, architectures, and operating system). An open-source TEE requires a verifiable log of input and output hash pairs to ensure the integrity and security of the computation being performed within the enclave and to help detect any unauthorized changes to the computation process. We call this chain of input and output hashes a blob chain, for lack of a better term. By maintaining a log of input and output hash pairs, the TEE can provide evidence that the computation was carried out correctly and that the output was derived from the specified inputs. This verifiable log can be used to prove to external parties that the computation was performed in a trustworthy manner and that the results are valid.
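A blob chain of this kind can be sketched as a hash-linked DAG. In the hypothetical Python sketch below, each entry records a build target, the hash of its inputs, the hash of its output, and the IDs of the entries it depends on; an entry’s ID is the hash of all of these fields. Editing a recorded pair therefore breaks that entry’s ID, and re-labelling the edited entry breaks the links from every entry built on top of it. The field names and the JSON encoding are assumptions, not a specified format.

```python
import hashlib
import json

# Hypothetical sketch: field names and encoding are illustrative.


def h(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class BlobLog:
    """Append-only DAG of (input hash, output hash) records for build targets."""

    def __init__(self) -> None:
        self.entries = {}  # entry ID -> record

    @staticmethod
    def entry_id(record: dict) -> str:
        # Canonical JSON, so every verifier computes the same ID for a record.
        return h(json.dumps(record, sort_keys=True).encode())

    def append(self, target: str, inputs: bytes, output: bytes, parents=()) -> str:
        for p in parents:
            if p not in self.entries:
                raise ValueError(f"unknown parent entry {p}")
        record = {
            "target": target,
            "input": h(inputs),
            "output": h(output),
            "parents": sorted(parents),  # IDs of entries this build depends on
        }
        eid = self.entry_id(record)
        self.entries[eid] = record
        return eid

    def verify(self) -> bool:
        """Every ID must match its record, and every parent must exist."""
        return all(
            eid == self.entry_id(rec) and all(p in self.entries for p in rec["parents"])
            for eid, rec in self.entries.items()
        )


log = BlobLog()
toolchain = log.append("toolchain/x86_64", b"compiler sources", b"compiler binary")
enclave = log.append("enclave/x86_64", b"enclave sources", b"enclave binary", parents=[toolchain])
print(log.verify())  # True

# Tampering: claim a different output for the toolchain build.
log.entries[toolchain]["output"] = h(b"backdoored compiler")
print(log.verify())  # False
```

This only detects tampering with a copy of the log you already hold; stopping someone from swapping in an entirely new log would also require the log to be published or replicated.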

Thesis IX of Walter Benjamin’s “On the Concept of History” [27] gives us the image of the angel of history, who is driven by a maelstrom to fly towards the future with its face turned towards the past. The angel of history is like a verifiable log of events, flying in one direction towards the future, computing a blob of history as it flies.

6. Verifiable Computation

In the pursuit of a minimal system that can be auditable and autonomous, we want to create a minimal universal circuit that can be easily studied and implemented. A minimalistic chip design gives us a few options:

- LISP machines [28] would be interesting, not just because they would take us back to the bifurcation in the historical trajectory of computers in the 1960s and 70s, but because we could build fairly simple, tailored TEE hardware on the basis of LISP. We could start by using Lurk, a Lisp dialect designed for zero-knowledge proofs, since LISP-based computation fits more naturally in hardware; the first use case would be to implement a zkVM in LISP and see what happens.
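To give a sense of how small a Lisp core can be, which is part of what makes it attractive as a basis for auditable hardware, here is a minimal evaluator in Python. It is a generic sketch in the style of classic tiny Lisp interpreters, not Lurk and not a hardware design, and it supports only integers, symbols, `quote`, `if`, `lambda`, and a few arithmetic primitives; the function names are hypothetical.

```python
import operator

# Hypothetical sketch: a generic tiny Lisp, not Lurk.


def tokenize(src: str) -> list:
    return src.replace("(", " ( ").replace(")", " ) ").split()


def parse(tokens: list):
    """Read one expression, consuming tokens from the front of the list."""
    tok = tokens.pop(0)
    if tok == "(":
        expr = []
        while tokens[0] != ")":
            expr.append(parse(tokens))
        tokens.pop(0)  # drop the closing ")"
        return expr
    try:
        return int(tok)
    except ValueError:
        return tok  # anything that is not an integer is a symbol


GLOBAL_ENV = {"+": operator.add, "-": operator.sub, "*": operator.mul, "<": operator.lt}


def evaluate(x, env=GLOBAL_ENV):
    if isinstance(x, str):  # symbol: look it up
        return env[x]
    if isinstance(x, int):  # number: evaluates to itself
        return x
    head, *args = x
    if head == "quote":
        return args[0]
    if head == "if":
        test, then, alt = args
        return evaluate(then if evaluate(test, env) else alt, env)
    if head == "lambda":
        params, body = args
        # Closures capture the defining environment (lexical scope).
        return lambda *vals: evaluate(body, {**env, **dict(zip(params, vals))})
    fn = evaluate(head, env)
    return fn(*[evaluate(a, env) for a in args])


print(evaluate(parse(tokenize("((lambda (x y) (* x (+ y 1))) 6 6)"))))  # 42
```

A usable language would need more (definitions, recursion, error handling); the point is only how little machinery the core requires.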

7. The Exploits of a Mom and Formal Verification

Remote software vulnerabilities can, in theory, be eliminated through formal verification.

The options we know of so far are:

- Isabelle [29]: interesting but top-down (“use our not formally verified formal verifier prover assistant”)

- CakeML [30] and Guix [31]: more bottom-up.

8. Remote Attestation

This section is currently under development. Future versions of this article will include detailed information on this topic.

Thank you

We stand on the shoulders of giants.

We would like to thank Andrew Miller, Christopher Goes and Ethan Buchman for their kind support and thoughtful guidance.

Special thanks also to Sylvain Bellemare who has been banging on the PUF drum before anyone noticed.

Thanks to Plural Research for facilitating radical exchange on the future of decentralisation in Ethereum and beyond.

A better world is possible.

References

-

SGX.Fail. (n.d.). Retrieved July 4, 2024, from https://sgx.fail/ ↩︎

-

Lee, D., Kohlbrenner, D., Shinde, S., Asanović, K., & Song, D. (2020). Keystone: An open framework for architecting trusted execution environments. Proceedings of the Fifteenth European Conference on Computer Systems, 1-16. https://doi.org/10.1145/3342195.3387532 ↩︎

-

Open source silicon root of trust (RoT), OpenTitan. (n.d.). Retrieved July 4, 2024, from https://opentitan.org/ ↩︎

-

Armanuzzaman, M., Sadeghi, A.-R., & Zhao, Z. (2024). Building Your Own Trusted Execution Environments Using FPGA (arXiv:2203.04214). arXiv. https://doi.org/10.48550/arXiv.2203.04214 ↩︎

-

Pinto, S. (n.d.). Retrieved July 4, 2024, from https://sandro2pinto.github.io/ ↩︎

-

Security & Trust: OP-TEE Open Source - FPGA IP Core Design. (n.d.). Missing Link Electronics. Retrieved July 4, 2024, from https://www.missinglinkelectronics.com/solutions/security-and-trust/ ↩︎

-

Xia, K., Luo, Y., Xu, X., & Wei, S. (2021). SGX-FPGA: Trusted Execution Environment for CPU-FPGA Heterogeneous Architecture. 2021 58th ACM/IEEE Design Automation Conference (DAC), 301–306. https://doi.org/10.1109/DAC18074.2021.9586207 ↩︎

-

Costan, V., & Devadas, S. (2016). Intel SGX Explained (2016/086). Cryptology ePrint Archive. https://eprint.iacr.org/2016/086 ↩︎ ↩︎2

-

Bellemare, S. (n.d.). qTEE: Moving Towards Secure-through-Physics TEE Chips - HackMD. Retrieved July 4, 2024, from https://hackmd.io/XfP4RKuIQrauaPS6N8g6Bw#The-Rise-of-Crypto-Physics ↩︎

-

Takarabt, S., Guilley, S., Souissi, Y., Sauvage, L., & Mathieu, Y. (2021). Post-layout Security Evaluation Methodology Against Probing Attacks. In N.-S. Vo, V.-P. Hoang, & Q.-T. Vien (Eds.), Industrial Networks and Intelligent Systems (Vol. 379, pp. 465–482). Springer International Publishing. https://doi.org/10.1007/978-3-030-77424-0_37 ↩︎

-

Ray, V. (2009, February 23). FREUD Applications of FIB: Invasive FIB Attacks and Countermeasures in Hardware Security Devices. https://doi.org/10.13140/2.1.4582.0486 ↩︎

-

Borel, S., Duperrex, L., Deschaseaux, E., Charbonnier, J., Clediere, J., Wacquez, R., Fournier, J., Souriau, J.-C., Simon, G., & Merle, A. (2018). A Novel Structure for Backside Protection Against Physical Attacks on Secure Chips or SiP. 2018 IEEE 68th Electronic Components and Technology Conference (ECTC), 515–520. https://doi.org/10.1109/ECTC.2018.00081 ↩︎

-

Weiner, M., Manich Bou, S., & Sigl, G. (2014). Defeating microprobing attacks using a resource efficient detection circuit. 1–6. https://upcommons.upc.edu/handle/2117/26536 ↩︎

-

Ling, M., Wu, L., Li, X., Zhang, X., Hou, J., & Wang, Y. (2012). Design of Monitor and Protect Circuits against FIB Attack on Chip Security. 2012 Eighth International Conference on Computational Intelligence and Security, 530–533. https://doi.org/10.1109/CIS.2012.125 ↩︎

-

Understanding Anti-Tamper Technology: Part 3. (n.d.). Rambus. Retrieved July 4, 2024, from https://www.rambus.com/blogs/understanding-anti-tamper-technology-part-3/ ↩︎

-

Gao, Y., Al-Sarawi, S. F., & Abbott, D. (2020). Physical unclonable functions. Nature Electronics, 3(2), 81–91. https://doi.org/10.1038/s41928-020-0372-5 ↩︎

-

Bellemare, S. (n.d.). Can we Hide Atoms? Google Docs. https://docs.google.com/presentation/d/1CcVM_0AFCBOpXGiDFtvf2wGz3KBG0EaoxyMXaITEWl0 ↩︎

-

Kumar, N., Mezher, R., & Kashefi, E. (2021, January 14). Efficient Construction of Quantum Physical Unclonable Functions with Unitary t-designs. https://www.semanticscholar.org/paper/Efficient-Construction-of-Quantum-Physical-with-Kumar-Mezher/c08b19554fbfc6630fefb910e1a6c6b8ef39ab96 ↩︎

-

Skoric, B. (2013). Security analysis of Quantum-Readout PUFs in the case of challenge-estimation attacks (2013/479). Cryptology ePrint Archive. https://eprint.iacr.org/2013/479 ↩︎

-

McGrath, T., Bagci, I. E., Wang, Z. M., Roedig, U., & Young, R. J. (2019). A PUF taxonomy. Applied Physics Reviews, 6(1), 011303. https://doi.org/10.1063/1.5079407 ↩︎

-

Roberts, J., Bagci, I. E., Zawawi, M. A. M., Sexton, J., Hulbert, N., Noori, Y. J., Young, M. P., Woodhead, C. S., Missous, M., Migliorato, M. A., Roedig, U., & Young, R. J. (2015). Using Quantum Confinement to Uniquely Identify Devices. Scientific Reports, 5(1), 16456. https://doi.org/10.1038/srep16456 ↩︎

-

Cao, Y., Robson, A. J., Alharbi, A., Roberts, J., Woodhead, C. S., Noori, Y. J., Bernardo-Gavito, R., Shahrjerdi, D., Roedig, U., Falko, V. I., & Young, R. J. (2017). Optical identification using imperfections in 2D materials. 2D Materials, 4(4), 045021. https://doi.org/10.1088/2053-1583/aa8b4d ↩︎

-

Galetsky, V., Ghosh, S., Deppe, C., & Ferrara, R. (2022). Comparison of Quantum PUF models. 2022 IEEE Globecom Workshops (GC Wkshps), 820–825. https://doi.org/10.1109/GCWkshps56602.2022.10008722 ↩︎

-

Arapinis, M., Delavar, M., Doosti, M., & Kashefi, E. (2021). Quantum Physical Unclonable Functions: Possibilities and Impossibilities. Quantum, 5, 475. https://doi.org/10.22331/q-2021-06-15-475 ↩︎

-

Longmate, K. D., Abdelazim, N. M., Ball, E. M., Majaniemi, J., & Young, R. J. (2021). Improving the longevity of optically-read quantum dot physical unclonable functions. Scientific Reports, 11(1), 10999. https://doi.org/10.1038/s41598-021-90129-2 ↩︎

-

Caltech (Director). (2019, July 11). Ising Machines: Non-Von Neumann Computing with Nonlinear Optics - Alireza Marandi - 6/7/2019. https://www.youtube.com/watch?v=V7BxJsLyubk ↩︎

-

Frankfurt School: On the Concept of History by Walter Benjamin. (n.d.). Retrieved July 4, 2024, from https://www.marxists.org/reference/archive/benjamin/1940/history.htm ↩︎

-

Lisp machine. (2024). In Wikipedia. https://en.wikipedia.org/w/index.php?title=Lisp_machine&oldid=1217888394 ↩︎

-

Isabelle (proof assistant). (2024). In Wikipedia. https://en.wikipedia.org/w/index.php?title=Isabelle_(proof_assistant)&oldid=1226930873 ↩︎

-

CakeML. (n.d.). Retrieved July 4, 2024, from https://cakeml.org/ ↩︎

-

GNU Guix transactional package manager and distribution—GNU Guix. (n.d.). Retrieved July 4, 2024, from https://guix.gnu.org/ ↩︎